As a developer, I have often found myself grappling with the challenges of scaling applications to meet increasing demands. Node.js, with its non-blocking architecture, has been a game-changer in the world of server-side JavaScript. However, one of the inherent limitations of Node.js is its single-threaded nature.

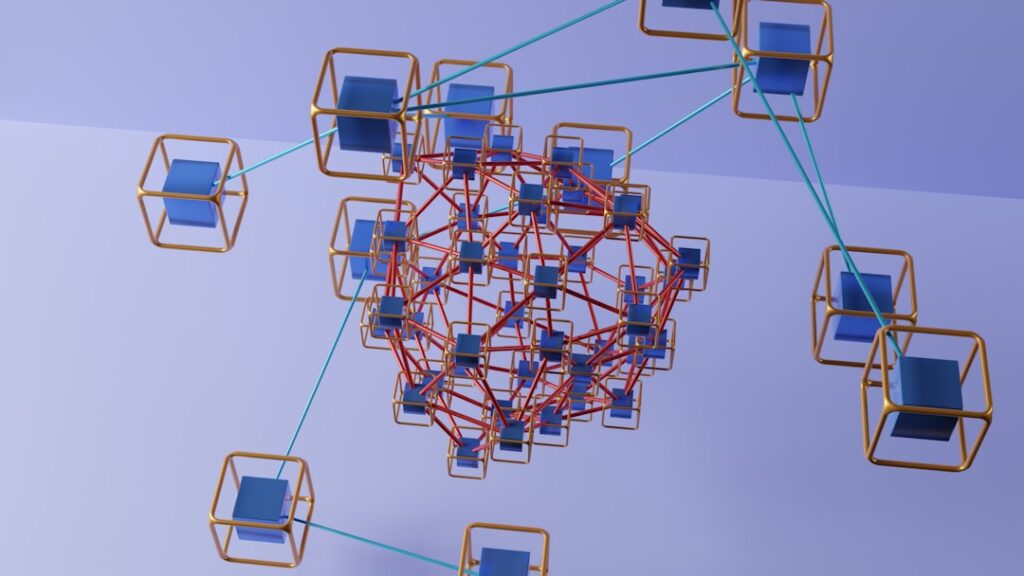

This is where the Node.js Cluster Module comes into play. The Cluster Module allows me to create multiple child processes that can share the same server port, effectively enabling my application to handle more requests simultaneously. The beauty of the Cluster Module lies in its simplicity and effectiveness.

By leveraging the capabilities of multi-core systems, I can distribute incoming requests across multiple instances of my application. This not only enhances performance but also improves fault tolerance. If one of the child processes crashes, the master process can easily spawn a new one to take its place, ensuring that my application remains resilient and responsive.

In this article, I will delve deeper into the intricacies of the Node.js Cluster Module, exploring its performance scaling capabilities, implementation strategies, and best practices.

When I think about performance scaling in Node.js, I often reflect on the importance of handling increased loads without compromising on speed or efficiency. Node.js runs JavaScript on a single-threaded event loop, which means that each process executes only one piece of JavaScript at a time, even though it can keep many I/O-bound requests in flight concurrently. While this model works exceptionally well for I/O-bound tasks, it becomes a bottleneck when faced with CPU-intensive operations, because a long computation blocks the loop and stalls every other request until it finishes.
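To make the bottleneck concrete, here is a minimal sketch that measures how late a zero-delay timer fires while the event loop is busy. The helper names `blockFor` and `measureTimerDelay` are hypothetical, and the busy-wait only stands in for real CPU-heavy work.

```javascript
// Busy-wait for roughly `ms` milliseconds, standing in for CPU-heavy work.
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // spin: nothing else can run on this thread in the meantime
  }
}

// Resolve with the number of milliseconds a 0 ms timer was delayed
// because the event loop was blocked for `blockMs`.
function measureTimerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduled = Date.now();
    setTimeout(() => resolve(Date.now() - scheduled), 0);
    blockFor(blockMs); // runs to completion before the timer callback can fire
  });
}

measureTimerDelay(50).then((delay) => {
  console.log(`timer fired about ${delay} ms late`);
});
```

In a real server, every pending request would wait in the same way the timer does here.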

This is where understanding performance scaling becomes crucial. To effectively scale my Node.js applications, I need to consider both vertical and horizontal scaling strategies.

Vertical scaling involves enhancing the existing server’s resources, such as CPU and memory, to handle more requests. However, this approach has its limits and can become costly. On the other hand, horizontal scaling allows me to distribute the load across multiple servers or processes. The Cluster Module applies this idea within a single machine by enabling me to spawn multiple instances of my application, thus maximizing resource utilization and improving overall performance.

Implementing the Node.js Cluster Module for load balancing has been one of the most rewarding experiences in my development journey. The process begins with requiring the cluster module and checking if the current process is the master (called the primary since Node.js 16, where `cluster.isPrimary` replaced the deprecated `cluster.isMaster`) or a worker. If it’s the master, I can easily fork multiple worker processes based on the number of CPU cores available on my machine.

This simple yet powerful approach allows me to distribute incoming connections across all workers. Once I have set up my workers, I can rely on the built-in load balancing mechanism provided by Node.js. By default, on every platform except Windows, the master process accepts incoming connections and hands them to the workers in round-robin order. The alternative policy lets the workers accept connections directly, which leaves the distribution to the operating system.

This means that as connections come in, they are automatically spread across the workers in turn. The default policy does not route by how busy each worker is, so a long-running request can still tie up the worker that received it. Even so, by implementing this load balancing strategy, I have noticed a significant improvement in my application’s responsiveness and throughput.
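The setup described above can be sketched roughly as follows. This is a minimal illustration, not a production configuration: the port `3000`, the `start` command-line argument, and the function names are assumptions made for the example.

```javascript
const cluster = require('node:cluster');
const http = require('node:http');
const os = require('node:os');

const PORT = 3000; // hypothetical port for this sketch

// cluster.isPrimary exists from Node.js 16; older versions expose cluster.isMaster.
const isPrimary = cluster.isPrimary ?? cluster.isMaster;

// Primary: fork one worker per CPU core (at least one).
function startPrimary() {
  const count = Math.max(1, os.cpus().length);
  for (let i = 0; i < count; i++) {
    cluster.fork();
  }
  console.log(`primary ${process.pid} forked ${count} workers`);
}

// Worker: every worker listens on the same port; the cluster module
// arranges for incoming connections to be shared between them.
function startWorker() {
  http
    .createServer((req, res) => {
      res.end(`handled by worker ${process.pid}\n`);
    })
    .listen(PORT);
}

// Only start when run as `node server.js start`. Forked workers receive
// the same arguments by default, so they take the worker branch.
if (process.argv[2] === 'start') {
  if (isPrimary) {
    startPrimary();
  } else {
    startWorker();
  }
}
```

Requesting `http://localhost:3000/` several times should show different worker PIDs in the responses, although keep-alive connections may keep a client on the same worker.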

In today’s computing landscape, multi-core processors are ubiquitous, and harnessing their power is essential for optimal application performance. The Node.js Cluster Module allows me to take full advantage of these multi-core systems by enabling me to run multiple instances of my application concurrently. Each worker is a separate operating system process with its own event loop and memory, so it can handle requests independently, which means that I can effectively utilize all available CPU cores.

When I deploy my application using the Cluster Module on a multi-core processor, I often see a marked improvement in metrics such as request latency and throughput, particularly when requests involve CPU-bound work. Each core can handle its own set of requests without waiting for others to finish processing. This parallelism not only speeds up response times but also enhances user experience by allowing my application to serve more users simultaneously before performance starts to degrade.

Monitoring and managing my Node.js Cluster Module setup is crucial for maintaining optimal performance and ensuring reliability. One of the first steps I take is to implement logging within each worker process. By capturing important metrics such as request counts, error rates, and response times, I can gain valuable insights into how each instance is performing.
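As a minimal sketch of per-worker metrics, the following collector tracks request counts, error rate, and average response time in memory. The name `createMetrics` and the choice to count status codes of 500 and above as errors are assumptions for illustration.

```javascript
// Create an in-memory metrics collector for the current worker process.
function createMetrics() {
  const totals = { requests: 0, errors: 0, totalMs: 0 };

  return {
    // Record one finished request with its status code and duration.
    record(statusCode, durationMs) {
      totals.requests += 1;
      if (statusCode >= 500) totals.errors += 1; // assumption: 5xx counts as an error
      totals.totalMs += durationMs;
    },

    // Return a summary that can be logged or sent to the primary process.
    snapshot() {
      const { requests, errors, totalMs } = totals;
      return {
        pid: process.pid,
        requests,
        errorRate: requests ? errors / requests : 0,
        avgMs: requests ? totalMs / requests : 0,
      };
    },
  };
}

const metrics = createMetrics();
metrics.record(200, 12);
metrics.record(503, 40);
console.log(metrics.snapshot());
```

Each worker would keep its own collector, since workers do not share memory. A worker could log the snapshot periodically or pass it to the primary with `process.send`.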

Process managers like PM2 help me keep track of these metrics in real time. Additionally, I find it essential to implement health checks for each worker process. If a worker becomes unresponsive or crashes, I want to be notified immediately so that I can take corrective action.

The Cluster Module allows me to listen for events such as ‘exit’ or ‘error’ on each worker process, enabling me to restart them automatically if needed. This proactive approach ensures that my application remains robust and minimizes downtime.
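A minimal sketch of automatic restarts, assuming a worker should be replaced only when it died unexpectedly. The helper names `shouldRestart` and `superviseWorkers` are hypothetical. The `exit` event on `cluster` and the `exitedAfterDisconnect` property are part of the cluster API.

```javascript
const cluster = require('node:cluster');

// Decide whether an exited worker should be replaced.
// exitedAfterDisconnect is true when the worker was stopped deliberately
// through worker.disconnect() or worker.kill().
function shouldRestart(worker, code, signal) {
  if (worker.exitedAfterDisconnect) return false;
  return code !== 0 || signal !== null;
}

// Call once in the primary process to log exits and replace crashed workers.
function superviseWorkers() {
  cluster.on('exit', (worker, code, signal) => {
    console.error(
      `worker ${worker.process.pid} exited (code=${code}, signal=${signal})`
    );
    if (shouldRestart(worker, code, signal)) {
      cluster.fork();
    }
  });
}
```

A worker that crashes immediately on startup would be restarted in a tight loop with this sketch, so a real setup would likely add a delay or a restart limit.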

Over time, I have learned several best practices for effectively using the Node.js Cluster Module in my applications. First and foremost, it’s important to ensure that my application is stateless whenever possible. Since each worker process has its own memory, state kept inside one worker (such as in-memory sessions or caches) is invisible to the others, which can lead to inconsistencies and unexpected behavior. By utilizing external storage solutions like Redis or databases for state management, I can avoid these pitfalls.

Another best practice is to limit the number of worker processes based on the available CPU cores. While it may be tempting to spawn as many workers as possible, doing so can lead to resource contention and diminished returns. A good rule of thumb is to match the number of workers to the number of CPU cores available on the server. This approach allows me to maximize resource utilization while maintaining optimal performance.
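One way to apply this rule of thumb is sketched below. It assumes a hypothetical `WEB_CONCURRENCY` environment variable for overriding the default and caps the result at the core count. `os.availableParallelism()` is only present in newer Node.js releases, so the sketch falls back to `os.cpus().length`.

```javascript
const os = require('node:os');

// Turn an optional requested value into a worker count between 1 and `cores`.
function resolveWorkerCount(requested, cores) {
  const parsed = Number.parseInt(requested, 10);
  if (!Number.isInteger(parsed) || parsed < 1) {
    return cores; // default: one worker per core
  }
  return Math.min(parsed, cores); // never exceed the number of cores
}

const cores = Math.max(
  1,
  typeof os.availableParallelism === 'function'
    ? os.availableParallelism()
    : os.cpus().length
);

// WEB_CONCURRENCY is an assumed variable name, not something Node.js reads itself.
const workerCount = resolveWorkerCount(process.env.WEB_CONCURRENCY, cores);
console.log(`would fork ${workerCount} of ${cores} possible workers`);
```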

Despite its many advantages, using the Node.js Cluster Module can sometimes lead to challenges that require troubleshooting. One common issue I encounter is related to inter-process communication (IPC). Since each worker runs in its own context, sharing data between them can be tricky.

To address this, I often rely on message passing through IPC channels provided by the Cluster Module. By sending messages between workers and the master process, I can synchronize tasks and share necessary information without running into conflicts.

Another issue that may arise is related to error handling within worker processes. If an unhandled exception occurs in a worker, it will crash without affecting other workers; however, this can lead to lost requests if not managed properly. To mitigate this risk, I implement robust error handling within each worker process and ensure that any critical errors are logged and reported back to the master process for further action.
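Both ideas, message passing and error reporting, can be combined in a short sketch. The message shape with `type: 'worker-error'` and the function names are hypothetical conventions chosen for this example. `process.send` and the `message` event on `cluster` are the actual IPC primitives.

```javascript
const cluster = require('node:cluster');

// Build the message a worker sends to the primary before it exits.
function buildErrorReport(err) {
  return {
    type: 'worker-error', // assumed message type for this sketch
    pid: process.pid,
    message: err && err.message ? err.message : String(err),
    stack: err && err.stack ? err.stack : null,
  };
}

// Worker side: report the failure, then exit so the primary can replace it.
function installWorkerErrorHandler() {
  process.on('uncaughtException', (err) => {
    const report = buildErrorReport(err);
    if (process.send) {
      // The callback runs once the message has been handed to the IPC channel.
      process.send(report, () => process.exit(1));
    } else {
      // Not running under cluster: no IPC channel is available.
      console.error(report);
      process.exit(1);
    }
  });
}

// Primary side: log error reports arriving from any worker.
function installPrimaryErrorListener(log = console.error) {
  cluster.on('message', (worker, message) => {
    if (message && message.type === 'worker-error') {
      log(`worker ${worker.id} (pid ${message.pid}) failed: ${message.message}`);
    }
  });
}
```

Exiting after an uncaught exception is deliberate here: the process may be in an inconsistent state, and a fresh worker is usually safer than continuing.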

As I look toward the future of the Node.js Cluster Module, I am excited about potential developments that could further enhance its capabilities. One area ripe for improvement is better integration with cloud-native architectures and containerization technologies like Docker and Kubernetes. As more applications move toward microservices architectures, having seamless support for clustering in these environments will be crucial for scalability and resilience.

Additionally, advancements in monitoring tools specifically designed for clustered environments could provide deeper insights into performance metrics across multiple instances. Enhanced visualization tools could help developers like me quickly identify bottlenecks or issues within our clustered applications, allowing us to make informed decisions about scaling and resource allocation.

In conclusion, the Node.js Cluster Module has proven to be an invaluable tool in my development toolkit. By understanding its capabilities and implementing best practices, I have been able to build scalable applications that perform well under pressure while maintaining reliability and responsiveness. As technology continues to evolve, I look forward to seeing how the Cluster Module adapts and grows alongside emerging trends in software development.

For developers looking to enhance the performance of their Node.js applications, the Node.js Cluster Module is a powerful tool for scaling applications across multiple CPU cores. By distributing incoming connections across several worker processes, it helps in maximizing the utilization of system resources.

FAQs

What is the Node.js Cluster Module?

The Node.js Cluster module allows for the easy creation of child processes to handle the load of a Node.js application. It enables the distribution of incoming connections among the child processes, thereby improving the performance and scalability of the application.

How does the Node.js Cluster Module improve performance scaling?

By creating multiple child processes, the Node.js Cluster module allows for better utilization of multi-core systems. Each child process can handle a portion of the incoming requests, leading to improved performance and scalability of the application.

What are the benefits of using the Node.js Cluster Module?

Some benefits of using the Node.js Cluster module include improved performance, better utilization of hardware resources, and enhanced scalability of Node.js applications. It also helps in handling a large number of concurrent connections efficiently.

How does the Node.js Cluster Module handle incoming requests?

By default, on all platforms except Windows, the Node.js Cluster module uses a round-robin approach: the primary process accepts incoming connections and distributes them among the child processes in turn. This spreads connections evenly, although it does not account for how much work each connection generates.
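The policy can also be chosen explicitly, as in this short sketch. The setting has to be made before the first worker is forked, and it can alternatively be supplied through the `NODE_CLUSTER_SCHED_POLICY` environment variable (`rr` or `none`).

```javascript
const cluster = require('node:cluster');

// Must be set before the first cluster.fork(); later changes have no effect.
// SCHED_RR: the primary accepts connections and hands them out in turn.
cluster.schedulingPolicy = cluster.SCHED_RR;

// Alternative: leave the distribution to the operating system.
// cluster.schedulingPolicy = cluster.SCHED_NONE;
```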

Are there any limitations or considerations when using the Node.js Cluster Module?

While the Node.js Cluster module can improve performance and scalability, it’s important to note that it does not automatically make an application fully scalable. Developers need to carefully design their applications to take full advantage of the module and consider potential issues such as shared state and inter-process communication.